Apple’s 2023 Worldwide Developers Conference kicked off today at 10 a.m. Pacific time with a keynote packed with product announcements, such as the M2 Ultra in an updated Mac Studio and a new Mac Pro. The most exciting news, though, is that Cupertino took the opportunity to announce its new headset, the Vision Pro. Most of the rumors that have circulated for months appear to have been accurate, but in true Apple fashion, the most interesting aspects lie in the software.

The Vision Pro is Apple’s first augmented reality device, or in the company’s parlance, a spatial computer. The idea is that instead of being shut off from the world as in a typical VR headset, users put on the Vision Pro and still see their surroundings, with the user interface overlaid on top, not unlike Microsoft’s HoloLens 2 AR headset. Apple has been pushing augmented reality technology for years as part of iOS and iPadOS, and developers have branched out into the space, with Pokémon Go perhaps the most high-profile example.

Apple’s AR operating system: visionOS

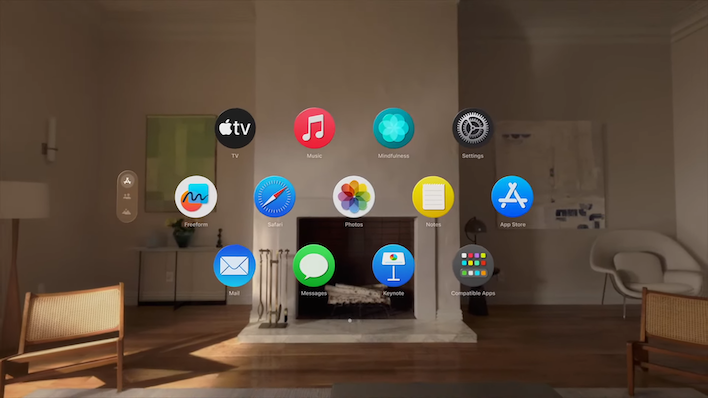

The home interface looks like a larger version of the Apple Watch app launcher, and Alan Dye, Apple’s vice president of Human Interface Design, said the design makes the UI feel like part of the room. UI elements have depth, respond to light, and cast shadows to convey their scale and distance within the room. Voice control covers dictation and asking Siri to open and close apps. While we haven’t tried it ourselves yet, the demo seemed pretty smooth. The only thing we’re not entirely convinced about is the virtual keyboard, but Apple says the Vision Pro works with its Bluetooth accessories, such as the Magic Keyboard and Magic Trackpad; hopefully that’s Apple’s way of saying it supports standard Bluetooth accessories, as iPadOS and macOS do. You can also bring your Mac’s display into the Vision Pro at up to 4K resolution just by looking at the Mac, then place that screen wherever you want in the room.

Apple said it filed more than 5,000 patents during the development of Vision Pro and visionOS. Dye says the entire visionOS experience feels like “magic,” but that word gets thrown around so much that we suspect it’s more the result of a lot of new research than magic. Still, visionOS looked quite polished in the demo: apps slid effortlessly left and right to make room for newly opened ones, filling in the space around the user as they navigated the system. Speaking of navigation, Apple’s demo included people walking around a room while wearing Vision Pro headsets, a handy byproduct of the device’s AR focus.

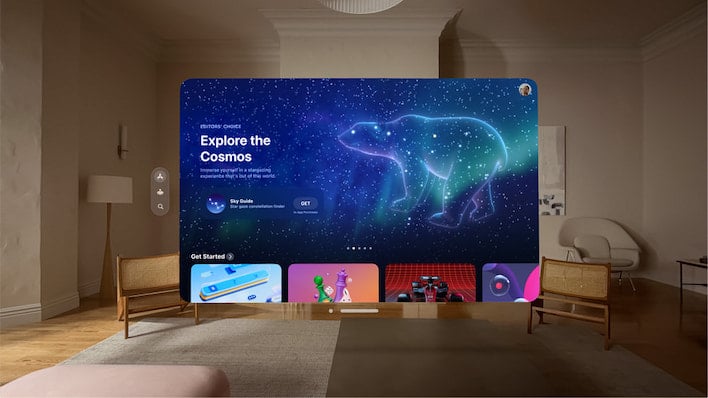

Apps, or “experiences” as Apple calls them, are not tied to a display; they can be resized and positioned anywhere around the user. Apps don’t cover each other up; they simply occupy different spaces in the room. Environments let the interface expand beyond the dimensions of the room, creating an immersive feel almost anywhere. Want to watch Ted Lasso from a lakeshore? That’s an option. The Digital Crown (another feature the Vision Pro borrows from the Apple Watch) lets users dial in exactly how immersed they want to be. Environments are captured volumetrically, so they avoid the flat, shifting-photo effect that often occurs with Facebook 3D photos.

While most headsets require at least a little extra hardware, such as controllers, to track the user’s hands and head in a room, Apple’s input design is built around not needing any additional hardware. Users can control the device using just their eyes, hand gestures, and voice, Dye said. Eye tracking appears to essentially mimic a mouse hover or cursor position, a concept we tried on the Alienware m15 R2 a few years ago, although Apple showed off a number of sensors surrounding the user’s eyes inside the Vision Pro to improve accuracy. Flick your fingers to scroll, or pinch to select objects. The interface looks intuitive and simple, two words often used to describe Apple’s user interface efforts, and everything seems very refined.

Unlike VR headsets, which tend to isolate the user from the world around them, the Vision Pro has features that keep the user connected to the real world. That matters for both the user and the people around them, as anyone who’s ever been startled or pranked while wearing headphones can attest. Vision Pro users can see people who are nearby, and thanks to an outward-facing display called EyeSight, others can see the wearer’s eyes behind the headset. EyeSight also gives cues to others, showing them when you’re focused on something. And when people approach, they appear even within an immersive view. Every little detail here shows that Apple has thought through a lot of scenarios, and suddenly the reported long-term investment starts to make sense.

The included apps are the same core apps available on all other Apple devices: browse the web in Safari, send and receive text messages and iMessages, make FaceTime calls, and sync all your data automatically with iCloud. Spatial audio and life-size FaceTime calls also seem like great ways to feel present with other people. Apps aren’t limited to two dimensions, either; Apple’s demo included receiving a 3D model attached to a message in Messages and pulling it out to view it as a wireframe on a table in the same room. If that isn’t the promise of Minority Report, we don’t know what is.

Apple Vision Pro: M2 and 23 megapixels

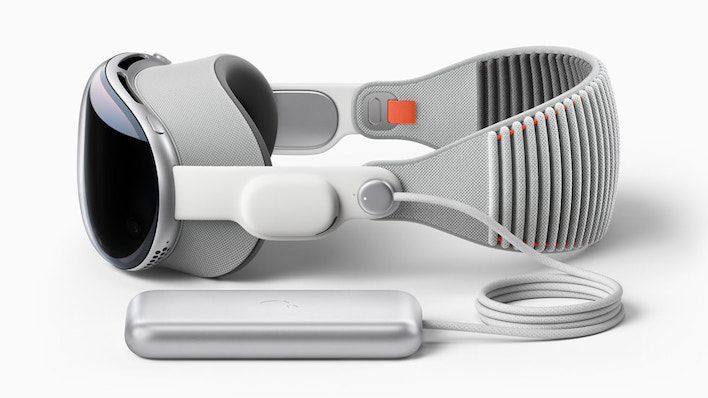

As for the Vision Pro itself, the hardware looks pretty nice. The casing is built around a large aluminum frame and uses a modular design that accommodates a variety of head sizes and face shapes. The headband is a single 3D-knitted piece that holds the main display and its flexible face mount close to the user’s eyes, with the integrated audio built into the straps at the temples. While the default configuration doesn’t leave room for glasses, Apple partnered with Zeiss to create magnetic prescription lens inserts.

The display itself is micro-OLED built on an Apple-designed silicon backplane and driven by an in-house display controller, with an RGB sub-pixel arrangement in which red sub-pixels sit above green ones. Mike Rockwell, vice president of Apple’s Technology Development Group, said the displays fit 64 pixels into the space of a single iPhone pixel. The Vision Pro has a pair of “postage stamp-sized” displays totaling 23 megapixels, which works out to more pixels per eye than a 4K display (roughly 8.3 megapixels), so the panels are extremely dense and can play back 4K HDR video. All of this is viewed through a pair of three-element lenses.
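As a quick sanity check on the pixel comparison above, the arithmetic below divides the announced 23-megapixel total between the two displays and compares the result with a 4K panel. It’s a back-of-the-envelope sketch under our own assumptions, not Apple’s figures: we assume the pixels are split evenly between the eyes and that “4K” means a 3840 × 2160 UHD panel. It also treats the 64-pixels-per-iPhone-pixel comparison as an area ratio, which would mean 8 times the linear pixel density.

```python
# Back-of-the-envelope check of the Vision Pro per-eye pixel comparison.
# Assumptions (ours, not Apple's): the 23-megapixel total is split evenly
# between the two displays, and "4K" means a 3840 x 2160 UHD panel.
import math

TOTAL_PIXELS = 23_000_000          # both displays combined, as announced
DISPLAYS = 2                       # one display per eye
UHD_4K_PIXELS = 3840 * 2160        # 8,294,400 pixels

per_eye = TOTAL_PIXELS / DISPLAYS  # pixels available to each eye
ratio = per_eye / UHD_4K_PIXELS    # per-eye pixels relative to one 4K panel

# "64 pixels in the space of one iPhone pixel" is an area ratio, so the
# linear density (pixels per inch) scales by its square root.
linear_density_factor = math.sqrt(64)

print(f"Per eye: {per_eye / 1e6:.1f} MP")
print(f"4K UHD: {UHD_4K_PIXELS / 1e6:.1f} MP")
print(f"Per-eye vs 4K: {ratio:.2f}x")
print(f"Linear density vs iPhone: {linear_density_factor:.0f}x")
```

Running it prints 11.5 MP per eye against 8.3 MP for a 4K panel, or about 1.39 times as many pixels, and an 8x linear density factor relative to an iPhone.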

Rockwell said Apple wants audio to sound like it’s coming from the room itself, not from the headset. Accordingly, the audio setup is equally sophisticated, with a pair of “audio pods” that direct sound not straight into the ears but around the user. Spatial audio relies on a proprietary Apple technology called audio ray tracing, which uses the headset’s sensors to analyze the room around the user and adjust playback to match the environment. The result, Rockwell said, is that sound feels like it comes from the surroundings. The system also packs many other sensors for head and hand tracking, including infrared illuminators, downward- and side-facing cameras, lidar, and more.

Needless to say, all those sensors and high-quality displays require a lot of computing power, so Apple equipped the Vision Pro with an Arm64-based M2 SoC, the same low-power chip found in the Mac mini, 13-inch MacBook Pro, and MacBook Air, which handles most of the computing work. Running alongside it is a new chip called R1, which processes all real-time sensor input from a dozen cameras, five sensors, and six microphones. Rockwell says R1 is necessary to keep latency low enough for sustained use, and the combination of M2 and R1 can bring new images to the displays within 12 milliseconds.

As with any first-generation device, early adopters will have to put up with some clunky aspects, particularly in how the device is powered. Apple CEO Tim Cook talks about the tethered aluminum battery pack as if it were a fashion accessory, but it means users will have to deal with a cable: whenever they wear the headset, a braided cord will run to the battery. On the other hand, keeping the battery out of the headset makes the Vision Pro lighter than it would otherwise be, which we don’t mind.

The biggest drawback of the external battery is that Apple chose not to make it larger; the company says users can expect around two hours of runtime. The battery’s size is clearly a case of form over function, since Apple says it’s designed to be portable. Cook touted the entertainment experience, saying users would be immersed in movies and sporting events, but two hours won’t be enough for many of either. Most users will likely keep the Vision Pro plugged in, using the battery only for brief stretches of untethered use, and the device does support running while plugged in.

Vision Pro looks to be a very flexible and intuitive piece of kit with huge potential, but all this technology doesn’t come cheap. Apple said the Vision Pro will cost $3,499 when it goes on sale. That’s a lot of money, but for perspective, it’s essentially the same price as the base model of Microsoft’s HoloLens 2. HoloLens is a business-focused product, while Apple appears to be targeting deep-pocketed consumers who are already invested in the fruity ecosystem. Will it sell? We’ll just have to find out; the Vision Pro will be available early next year.